Recall in Machine Learning

14 Mar

Let’s dive into Recall, another key evaluation metric for classification models. Recall is particularly important when the cost of false negatives is high. It helps answer the question: “Of all the actual positive cases, how many did the model correctly predict?”

What is Recall in Machine Learning

Recall measures the proportion of true positive predictions (correctly predicted positive cases) out of all actual positive cases (both true positives and false negatives). It focuses on the model’s ability to identify all positive cases.

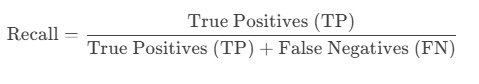

Formula of Recall

Recall is calculated using the following formula:

$$\text{Recall} = \frac{TP}{TP + FN}$$

Where:

- True Positives (TP): Correctly predicted positive cases.

- False Negatives (FN): Actual positive cases that the model incorrectly predicted as negative (missed positives).
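As a minimal sketch in plain Python, the formula translates directly into a small function. The name `recall` and the choice to return 0.0 when there are no actual positives (so the denominator would be zero) are assumptions of this example, not part of the original tutorial; scikit-learn's `recall_score` exposes a `zero_division` parameter for that same edge case.

```python
def recall(tp: int, fn: int) -> float:
    """Recall = TP / (TP + FN): the share of actual positives the model caught."""
    actual_positives = tp + fn
    if actual_positives == 0:
        # No positive cases exist, so recall is undefined.
        # This sketch returns 0.0 by convention (an assumption).
        return 0.0
    return tp / actual_positives


print(recall(4, 1))  # 4 of 5 positives caught -> 0.8
```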

When to Use Recall

Recall is a critical metric when:

- False Negatives are Costly:

- Example: In medical diagnosis, missing a disease (false negative) is much more costly than a false alarm (false positive).

- Focus on Identifying All Positives:

- When the goal is to identify as many positive cases as possible, even if that means accepting some false positives.

- Imbalanced Datasets:

- When the positive class is rare, recall helps ensure that the model doesn’t miss too many positive cases.
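To see why recall matters on imbalanced data, consider a small hypothetical dataset (95 healthy patients, 5 diseased) and a naive "model" that always predicts healthy. Both the data and the model are invented for illustration. Accuracy looks excellent, yet recall reveals that every sick patient was missed.

```python
# Hypothetical imbalanced dataset: 95 healthy (0) and 5 diseased (1) patients
y_true = [0] * 95 + [1] * 5
# A naive model that predicts "healthy" for everyone
y_pred = [0] * 100

pairs = list(zip(y_true, y_pred))

# Accuracy: fraction of all predictions that are correct
accuracy = sum(t == p for t, p in pairs) / len(pairs)

# Recall: fraction of actual positives that were predicted positive
tp = sum(t == 1 and p == 1 for t, p in pairs)
fn = sum(t == 1 and p == 0 for t, p in pairs)
recall = tp / (tp + fn)

print(f"Accuracy: {accuracy:.2f}")  # Accuracy: 0.95
print(f"Recall:   {recall:.2f}")    # Recall:   0.00
```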

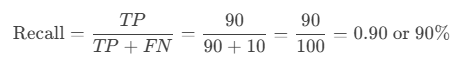

Example of Recall

Let’s say you have a binary classification problem where you’re predicting whether a patient has a disease (Positive) or not (Negative). After evaluating your model, you get the following results:

- True Positives (TP): 90 (patients with the disease correctly identified).

- False Negatives (FN): 10 (patients with the disease incorrectly identified as healthy).

Using the formula:

$$\text{Recall} = \frac{TP}{TP + FN} = \frac{90}{90 + 10} = \frac{90}{100} = 0.9$$

This means that 90% of the patients with the disease were correctly identified.
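The same result can be reproduced from label lists. The sketch below builds the example's 100 diseased patients (90 caught, 10 missed) and then appends a batch of hypothetical healthy patients with arbitrary predictions; those extra patients are an assumption added only to show that true negatives and false positives do not change recall at all.

```python
# The example's positive class: 100 patients who have the disease
y_true = [1] * 100
y_pred = [1] * 90 + [0] * 10  # 90 caught (TP), 10 missed (FN)

# Hypothetical healthy patients (not from the example): 20 false alarms, 30 correct negatives
y_true += [0] * 50
y_pred += [1] * 20 + [0] * 30

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
recall = tp / (tp + fn)

print(f"TP={tp}, FN={fn}, Recall={recall:.0%}")  # TP=90, FN=10, Recall=90%
```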

Advantages of Recall

- Focus on Positive Cases:

- Recall ensures that the model identifies as many positive cases as possible, which is critical in applications where false negatives are costly.

- Useful for Imbalanced Datasets:

- When the positive class is rare, recall helps evaluate how well the model identifies the positive class without being overwhelmed by the majority class.

Limitations of Recall

While recall is useful, it has some limitations:

- Ignores False Positives:

- Recall doesn’t account for false positives (incorrectly predicted positive cases). A model with high recall might have many false positives.

- Trade-off with Precision:

- Increasing recall often reduces precision (the proportion of positive predictions that are actually correct), and vice versa. This is known as the precision-recall trade-off.
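A quick way to see that recall ignores false positives is a degenerate model that flags every case as positive. The sketch below reuses the labels from the hands-on example later in this tutorial; the "flag everyone" model is a hypothetical illustration. It reaches perfect recall while half of its positive predictions are wrong.

```python
y_true = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]  # 0 = Healthy, 1 = Disease
y_pred = [1] * len(y_true)              # degenerate model: flag every patient

pairs = list(zip(y_true, y_pred))
tp = sum(t == 1 and p == 1 for t, p in pairs)
fn = sum(t == 1 and p == 0 for t, p in pairs)
fp = sum(t == 0 and p == 1 for t, p in pairs)

recall = tp / (tp + fn)     # every actual positive is caught
precision = tp / (tp + fp)  # but half of the positive predictions are false alarms

print(f"Recall: {recall:.2f}, Precision: {precision:.2f}")  # Recall: 1.00, Precision: 0.50
```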

When Not to Use Recall

Avoid using recall when:

- False Positives are Costly:

- If false positives are more costly than false negatives, recall alone is not sufficient. Use precision instead, or the F1-score, which balances precision and recall.

- Balanced Classes:

- If the dataset is balanced and both false positives and false negatives are equally important, accuracy might be a better metric.
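When recall alone is not enough, precision and the F1-score (the harmonic mean of precision and recall) can be computed from the same counts. In this sketch the function name `precision_recall_f1` is hypothetical, and the false-positive count of 60 is an assumed value added to the earlier medical example (TP = 90, FN = 10). It also assumes the denominators are non-zero.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, F1) from confusion-matrix counts.

    Assumes tp + fp > 0 and tp + fn > 0.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    # Harmonic mean 2PR / (P + R), written in the equivalent count form 2TP / (2TP + FP + FN)
    f1 = 2 * tp / (2 * tp + fp + fn)
    return precision, recall, f1


# Hypothetical counts: the example's TP=90 and FN=10, plus an assumed FP=60
p, r, f1 = precision_recall_f1(tp=90, fp=60, fn=10)
print(f"Precision={p:.2f}, Recall={r:.2f}, F1={f1:.2f}")  # Precision=0.60, Recall=0.90, F1=0.72
```

A high recall (0.90) paired with a modest precision (0.60) pulls the F1-score down to 0.72, which is why F1 is a safer single number when false positives also matter.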

Recall in Context: Precision-Recall Trade-off

Recall is often evaluated alongside precision (the accuracy of positive predictions). There’s a trade-off between the two:

- High Recall: Fewer false negatives, but more false positives.

- High Precision: Fewer false positives, but more false negatives.

The choice between recall and precision depends on the problem:

- High Recall: Important in medical diagnosis, where false negatives (missing a disease) are costly.

- High Precision: Important in spam detection, where false positives (flagging legitimate emails as spam) are costly.
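The trade-off usually comes from the decision threshold applied to a model's scores: lowering the threshold labels more cases as positive, which tends to raise recall and lower precision. The labels and scores below are invented for illustration, and the helper `precision_recall_at` is a hypothetical name used only in this sketch.

```python
# Hypothetical true labels and model scores (probability of the positive class)
y_true = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
scores = [0.95, 0.85, 0.70, 0.55, 0.50, 0.40, 0.35, 0.20, 0.10, 0.05]


def precision_recall_at(threshold):
    """Predict positive when score >= threshold, then return (precision, recall)."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


results = {}
for threshold in (0.8, 0.5, 0.3):
    precision, recall = precision_recall_at(threshold)
    results[threshold] = (precision, recall)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

With these made-up scores, lowering the threshold from 0.8 to 0.3 raises recall from 0.40 to 1.00 while precision falls from 1.00 to 0.71. scikit-learn's `precision_recall_curve` computes the same pair of metrics across every distinct score threshold.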

Hands-On Example of Recall

Let’s calculate recall using Python and scikit-learn:

```python
from sklearn.metrics import recall_score

# True labels: 0 = Healthy, 1 = Disease
y_true = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]

# Model's predicted labels
y_pred = [0, 1, 0, 0, 1, 0, 1, 1, 1, 0]

# Calculate recall for the positive class (label 1)
recall = recall_score(y_true, y_pred)

print(f"Recall: {recall * 100:.2f}%")
```

Output

```
Recall: 80.00%
```
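To check where the 80% comes from, the sketch below tallies the four confusion-matrix cells for the same labels in plain Python (no libraries assumed). Of the 5 actual disease cases, 4 were predicted correctly and 1 was missed, so recall is 4 / (4 + 1) = 0.8. For binary 0/1 labels, scikit-learn's `confusion_matrix(y_true, y_pred).ravel()` returns the same counts in the order TN, FP, FN, TP.

```python
y_true = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0]  # 0 = Healthy, 1 = Disease
y_pred = [0, 1, 0, 0, 1, 0, 1, 1, 1, 0]  # Model's predictions

# Tally each cell of the confusion matrix
counts = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
for t, p in zip(y_true, y_pred):
    if t == 1:
        counts["TP" if p == 1 else "FN"] += 1
    else:
        counts["FP" if p == 1 else "TN"] += 1

recall = counts["TP"] / (counts["TP"] + counts["FN"])
print(counts)                   # {'TP': 4, 'FN': 1, 'FP': 1, 'TN': 4}
print(f"Recall: {recall:.2%}")  # Recall: 80.00%
```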

Key Takeaways

- Recall measures the proportion of true positive predictions out of all actual positive cases.

- It’s a critical metric when false negatives are costly (e.g., medical diagnosis, fraud detection).

- Recall is often evaluated alongside precision, and there’s a trade-off between the two.

- Use recall when the focus is on identifying as many positive cases as possible.
