14 Mar Model Evaluation Terminologies

Let us go through the key terms and concepts used in model evaluation, so that we can understand and interpret evaluation metrics effectively. The key terminologies are:

True Positives (TP), True Negatives (TN), False Positives (FP), False Negatives (FN)

These are the building blocks of the confusion matrix and are used to describe the outcomes of predictions in a classification problem.

- True Positives (TP): The model correctly predicted the positive class.

- Example: A spam email is correctly identified as spam.

- True Negatives (TN): The model correctly predicted the negative class.

- Example: A legitimate email is correctly identified as not spam.

- False Positives (FP): The model incorrectly predicted the positive class.

- Example: A legitimate email is incorrectly flagged as spam.

- False Negatives (FN): The model incorrectly predicted the negative class.

- Example: A spam email is incorrectly identified as legitimate.

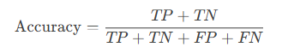
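Let us see how the four outcomes can be counted in code. The following is a minimal sketch in plain Python, assuming binary labels where 1 means spam and 0 means legitimate. The function name `confusion_counts` and the sample labels are hypothetical, chosen only for illustration.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for a binary classification problem."""
    tp = tn = fp = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1  # predicted positive, actually positive
            else:
                fp += 1  # predicted positive, actually negative
        else:
            if actual == positive:
                fn += 1  # predicted negative, actually positive
            else:
                tn += 1  # predicted negative, actually negative
    return tp, tn, fp, fn


# Hypothetical labels: 1 = spam, 0 = legitimate
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]

tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(tp, tn, fp, fn)  # 3 3 1 1
```

Here one spam email was missed (a false negative) and one legitimate email was flagged as spam (a false positive).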

Accuracy

- Definition: The proportion of correct predictions (both true positives and true negatives) out of all predictions.

- Formula: $\text{Accuracy} = \dfrac{TP + TN}{TP + TN + FP + FN}$

- Use Case: Useful when the classes are balanced.

- Limitation: Accuracy can be misleading on imbalanced datasets. For example, if 95% of the samples are negative, a model that always predicts the negative class achieves 95% accuracy without detecting a single positive.

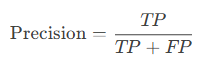
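Let us see accuracy on an imbalanced dataset. This is a small sketch, assuming the four confusion matrix counts are already known. The function name `accuracy` and the counts are hypothetical.

```python
def accuracy(tp, tn, fp, fn):
    """Proportion of correct predictions out of all predictions."""
    total = tp + tn + fp + fn
    # Returning 0.0 for an empty input is a convention assumed here
    return (tp + tn) / total if total else 0.0


# Hypothetical imbalanced dataset: 95 negatives and 5 positives.
# A model that always predicts "negative" gives TP=0, TN=95, FP=0, FN=5.
print(accuracy(tp=0, tn=95, fp=0, fn=5))  # 0.95
```

The score looks high, yet the model has not found any of the 5 positive samples.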

Precision

- Definition: The proportion of true positives out of all predicted positives.

- Formula: $\text{Precision} = \dfrac{TP}{TP + FP}$

- Use Case: Important when the cost of false positives is high (e.g., in spam detection, where flagging a legitimate email as spam is costly).

- Interpretation: High precision means fewer false positives.

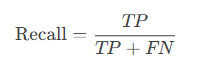
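Let us compute precision from the counts. This is a minimal sketch; the function name `precision` and the numbers are hypothetical, and returning 0.0 when the model predicts no positives is a convention assumed here.

```python
def precision(tp, fp):
    """Proportion of predicted positives that are actually positive."""
    predicted_positive = tp + fp
    # Assumed convention: 0.0 when nothing was predicted as positive
    return tp / predicted_positive if predicted_positive else 0.0


# Hypothetical spam filter: 4 emails flagged as spam, 3 of them really spam
print(precision(tp=3, fp=1))  # 0.75
```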

Recall (Sensitivity)

- Definition: The proportion of true positives out of all actual positives.

- Formula: $\text{Recall} = \dfrac{TP}{TP + FN}$

- Use Case: Important when the cost of false negatives is high (e.g., in medical diagnosis, where missing a disease is costly).

- Interpretation: High recall means fewer false negatives.

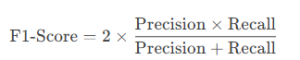
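Let us compute recall from the counts. This is a minimal sketch; the function name `recall` and the numbers are hypothetical, and returning 0.0 when there are no actual positives is a convention assumed here.

```python
def recall(tp, fn):
    """Proportion of actual positives that the model found."""
    actual_positive = tp + fn
    # Assumed convention: 0.0 when the data has no actual positives
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical screening test: 10 patients have the disease, 8 are detected
print(recall(tp=8, fn=2))  # 0.8
```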

F1-Score

- Definition: The harmonic mean of precision and recall, providing a balance between the two.

- Formula: $F_1 = 2 \times \dfrac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$

- Use Case: Useful when you need a single metric that balances precision and recall, especially in imbalanced datasets.

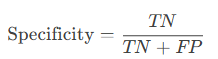
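Let us see why the harmonic mean is used. The sketch below compares the F1-score with a simple average for a model with low precision and perfect recall. The function name `f1_score` and the values are hypothetical.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both are zero
    return 2 * precision * recall / (precision + recall)


# Hypothetical model: low precision, perfect recall
p, r = 0.5, 1.0
print(f1_score(p, r))  # about 0.667
print((p + r) / 2)     # simple average: 0.75
```

The harmonic mean stays closer to the lower of the two values, so a model cannot get a high F1-score by doing well on only one of them.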

Specificity

- Definition: The proportion of true negatives out of all actual negatives.

- Formula: $\text{Specificity} = \dfrac{TN}{TN + FP}$

- Use Case: Important when the cost of false positives is high (e.g., in fraud detection, where incorrectly flagging a transaction as fraudulent is costly).
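Let us compute specificity together with the false positive rate, which is its complement. This is a minimal sketch; the function names and the counts are hypothetical.

```python
def specificity(tn, fp):
    """Proportion of actual negatives correctly identified as negative."""
    actual_negative = tn + fp
    # Assumed convention: 0.0 when the data has no actual negatives
    return tn / actual_negative if actual_negative else 0.0


def false_positive_rate(tn, fp):
    """Proportion of actual negatives wrongly flagged as positive."""
    actual_negative = tn + fp
    return fp / actual_negative if actual_negative else 0.0


# Hypothetical fraud detector: 100 genuine transactions, 10 wrongly flagged
print(specificity(tn=90, fp=10))          # 0.9
print(false_positive_rate(tn=90, fp=10))  # 0.1
```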

ROC Curve and AUC

- ROC Curve (Receiver Operating Characteristic Curve):

- A plot of the true positive rate (recall) against the false positive rate (1 – specificity) at various threshold settings.

- AUC (Area Under the Curve):

- A single metric that summarizes the ROC curve. AUC ranges from 0 to 1, where 1 indicates perfect performance and 0.5 corresponds to random guessing.

- Use Case: The ROC curve helps in choosing a classification threshold, and AUC is useful for comparing models without fixing a single threshold.

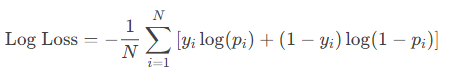
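Let us build the ROC curve by hand. The sketch below sweeps the threshold over the predicted scores, records the (false positive rate, true positive rate) point at each threshold, and estimates the AUC with the trapezoidal rule. It assumes labels are 0 or 1 and that both classes are present. The function names and the data are hypothetical, and libraries such as scikit-learn provide their own implementations.

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) points for thresholds from highest to lowest score."""
    pos = sum(1 for y in y_true if y == 1)
    neg = len(y_true) - pos  # assumes pos > 0 and neg > 0
    points = [(0.0, 0.0)]    # threshold above every score: nothing is positive
    for threshold in sorted(set(scores), reverse=True):
        # A sample is predicted positive when its score reaches the threshold
        tp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(y_true, scores) if s >= threshold and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the curve using the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical labels and predicted scores
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]

points = roc_points(y_true, scores)
print(points)       # [(0.0, 0.0), (0.0, 0.5), (0.5, 0.5), (0.5, 1.0), (1.0, 1.0)]
print(auc(points))  # 0.75
```

One negative sample (score 0.4) is ranked above one positive sample (score 0.35), which is why the AUC is below 1.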

Log Loss (Logarithmic Loss)

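- Definition: A metric that measures the performance of a classification model whose prediction is a probability value between 0 and 1. It penalizes confident but wrong predictions heavily, and lower values are better.

- Formula: $\text{Log Loss} = -\dfrac{1}{N} \sum_{i=1}^{N} \left[ y_i \log(p_i) + (1 - y_i) \log(1 - p_i) \right]$

where $y_i$ is the actual label, $p_i$ is the predicted probability of the positive class, and $N$ is the number of samples.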

- Use Case: Useful for evaluating models that output probabilities (e.g., logistic regression).

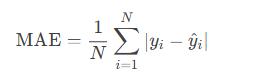

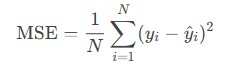
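Let us compute log loss for binary labels. This is a minimal sketch using the natural logarithm. The function name `log_loss`, the clipping value `eps` (used to avoid taking the log of 0), and the sample data are assumptions made for illustration.

```python
import math


def log_loss(y_true, p_pred, eps=1e-15):
    """Average binary log loss; lower values are better."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        # Clip the probability so that log(0) never occurs
        p = min(max(p, eps), 1 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


y_true = [1, 0]

# Confident and correct predictions give a small loss
print(log_loss(y_true, [0.9, 0.1]))

# Confident but wrong predictions give a much larger loss
print(log_loss(y_true, [0.1, 0.9]))
```

Both sets of predictions are equally confident, but the loss is far higher when the confident predictions are wrong.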

Mean Absolute Error (MAE) and Mean Squared Error (MSE)

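- MAE: The average of the absolute differences between predicted and actual values, $\text{MAE} = \dfrac{1}{N} \sum_{i=1}^{N} |y_i - \hat{y}_i|$.

- MSE: The average of the squared differences between predicted and actual values, $\text{MSE} = \dfrac{1}{N} \sum_{i=1}^{N} (y_i - \hat{y}_i)^2$. Squaring makes MSE more sensitive to large errors than MAE.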

- Use Case: Commonly used for regression problems.

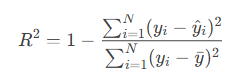
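Let us compute MAE and MSE on the same predictions. This is a minimal sketch in plain Python; the function names and the values are hypothetical.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of the absolute differences between actual and predicted values."""
    return sum(abs(y - y_hat) for y, y_hat in zip(y_true, y_pred)) / len(y_true)


def mean_squared_error(y_true, y_pred):
    """Average of the squared differences between actual and predicted values."""
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / len(y_true)


# Hypothetical actual and predicted values
y_true = [3, 5, 2, 7]
y_pred = [2, 5, 4, 7]

print(mean_absolute_error(y_true, y_pred))  # 0.75
print(mean_squared_error(y_true, y_pred))   # 1.25
```

The single error of size 2 contributes 2 to the MAE sum but 4 to the MSE sum, so larger errors weigh more in MSE.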

R-squared (Coefficient of Determination)

- Definition: A metric that indicates the proportion of the variance in the dependent variable that is predictable from the independent variables.

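- Formula: $R^2 = 1 - \dfrac{\sum_{i=1}^{N} (y_i - \hat{y}_i)^2}{\sum_{i=1}^{N} (y_i - \bar{y})^2}$

where $y_i$ is the actual value, $\hat{y}_i$ is the predicted value, and $\bar{y}$ is the mean of the actual values.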

- Use Case: Useful for evaluating the goodness of fit in regression models.
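Let us compute R-squared as 1 minus the ratio of the residual sum of squares to the total sum of squares. This is a minimal sketch, assuming the actual values are not all identical (otherwise the denominator is zero). The function name `r_squared` and the values are hypothetical.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    # Residual sum of squares: error left after using the model
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred))
    # Total sum of squares: error of always predicting the mean
    ss_tot = sum((y - mean_y) ** 2 for y in y_true)
    return 1 - ss_res / ss_tot  # assumes ss_tot is not zero


# Hypothetical actual and predicted values
y_true = [3, 5, 2, 7]
y_pred = [2, 5, 4, 7]

print(r_squared(y_true, y_pred))                     # about 0.66
print(r_squared(y_true, [4.25, 4.25, 4.25, 4.25]))   # 0.0
```

A model that always predicts the mean of the actual values (4.25 here) gets an R-squared of 0, and a perfect model gets 1.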
