03 Mar Install the Tokenizers Hugging Face library

In this lesson, learn how to install the Tokenizers library developed by Hugging Face. Tokenizers is a fast, efficient, and flexible library for tokenizing text, which is a crucial step in natural language processing (NLP). It is often used alongside the Transformers library.


Install the Tokenizers library

To install Tokenizers, use pip:

pip install tokenizers
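To confirm that pip actually installed the package, you can query the installed version from Python. The sketch below uses only the standard library (`importlib.util.find_spec` and `importlib.metadata.version`). The helper name `installed_tokenizers_version` is our own, not part of the Tokenizers API.

```python
from importlib import metadata
from importlib.util import find_spec
from typing import Optional


def installed_tokenizers_version() -> Optional[str]:
    """Return the installed tokenizers version, or None if it is not installed.

    Hypothetical helper for this lesson, not part of the Tokenizers API.
    """
    # find_spec returns None when the module cannot be found on sys.path
    if find_spec("tokenizers") is None:
        return None
    try:
        # Read the version from the package metadata that pip writes at install time
        return metadata.version("tokenizers")
    except metadata.PackageNotFoundError:
        # Importable, but without installed metadata (e.g. a raw source checkout)
        return None


if __name__ == "__main__":
    version = installed_tokenizers_version()
    if version is None:
        print("tokenizers is not installed")
    else:
        print(f"tokenizers {version} is installed")
```

Checking the metadata rather than importing the module keeps the check cheap and avoids loading the compiled extension just to read a version string.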

Install the Tokenizers library on Google Colab

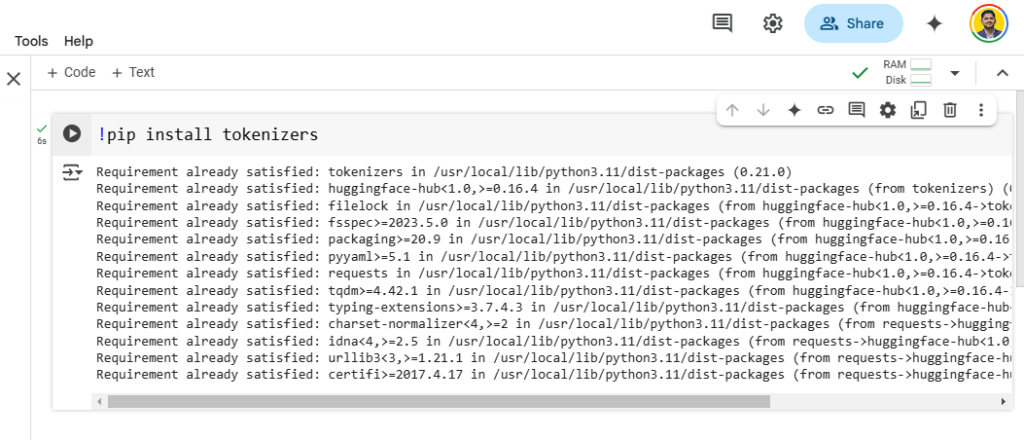

To install the Tokenizers library on Google Colab, run the following command in a notebook cell (the ! prefix runs it as a shell command):

!pip install tokenizers

We ran the above command and installed the Tokenizers library successfully on Google Colab.
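Once the installation finishes, you can check in a new cell that the library actually tokenizes text. This minimal sketch builds a tiny word-level tokenizer from a hand-written, hypothetical three-word vocabulary, so nothing is downloaded. `Tokenizer`, the `WordLevel` model, and the `Whitespace` pre-tokenizer are real Tokenizers components; the vocabulary and input sentence are illustrative only.

```python
from tokenizers import Tokenizer
from tokenizers.models import WordLevel
from tokenizers.pre_tokenizers import Whitespace

# Hypothetical toy vocabulary; a real tokenizer would be trained or loaded from a file
vocab = {"[UNK]": 0, "hello": 1, "world": 2}

# Word-level model: each known word maps to one id, unknown words map to [UNK]
tokenizer = Tokenizer(WordLevel(vocab, unk_token="[UNK]"))
# Split the input on whitespace and punctuation before the model sees it
tokenizer.pre_tokenizer = Whitespace()

encoding = tokenizer.encode("hello world !")
print(encoding.tokens)
print(encoding.ids)
```

Here "hello" and "world" are found in the vocabulary, while "!" is not, so it falls back to the `[UNK]` id.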

Install the Tokenizers library from the Hugging Face GitHub repository

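If you want to install the latest development version of the library directly from the Hugging Face GitHub repository, you can do so with the following command:

pip install "git+https://github.com/huggingface/tokenizers.git#subdirectory=bindings/python"

The git+https://github.com/huggingface/tokenizers.git part tells pip to fetch the package from the Hugging Face Tokenizers repository on GitHub, and #subdirectory=bindings/python points pip at the folder that contains the Python package. Because Tokenizers is written in Rust, this builds the library from source, so a Rust toolchain must be installed first.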
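Because a GitHub install gives you a development snapshot rather than a PyPI release, it can help to confirm where the installed copy came from. For packages installed from a URL or a version-control system, pip records the origin in a `direct_url.json` metadata file (PEP 610). The hypothetical helper `tokenizers_git_commit` below, a sketch rather than part of Tokenizers, reads that file with `importlib.metadata` and returns the Git commit, or `None` for a regular PyPI install.

```python
import json
from importlib import metadata
from typing import Optional


def tokenizers_git_commit() -> Optional[str]:
    """Return the Git commit tokenizers was built from, if installed from a VCS URL.

    Hypothetical helper: relies on the PEP 610 direct_url.json file that pip
    writes for direct-URL installs. Installs from an index such as PyPI have no such file.
    """
    try:
        dist = metadata.distribution("tokenizers")
    except metadata.PackageNotFoundError:
        return None  # tokenizers is not installed at all
    raw = dist.read_text("direct_url.json")
    if raw is None:
        return None  # installed from an index such as PyPI
    info = json.loads(raw)
    vcs_info = info.get("vcs_info")
    if vcs_info is None:
        return None  # direct-URL install, but not from a VCS (e.g. a local directory)
    return vcs_info.get("commit_id")


if __name__ == "__main__":
    commit = tokenizers_git_commit()
    print(commit or "tokenizers was not installed from a Git repository")
```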
