14 Mar F1 Score in Machine Learning

Let’s dive into the F1 Score, a critical evaluation metric for classification models. The F1 Score is particularly useful when you need to balance precision and recall, especially in scenarios where both false positives and false negatives are important.

What is the F1 Score in Machine Learning

The F1 Score is the harmonic mean of precision and recall. It provides a single metric that balances the trade-off between precision (accuracy of positive predictions) and recall (ability to identify all positive cases).

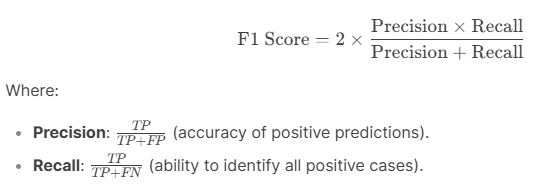

Formula of F1 Score

The F1 Score is calculated using the following formula:

When to Use the F1 Score

The F1 Score is a critical metric when:

- Both False Positives and False Negatives are Important:

- Example: In medical diagnosis, both false positives (incorrectly diagnosing a healthy patient) and false negatives (missing a disease) are costly.

- Imbalanced Datasets:

- When the dataset is imbalanced, and the positive class is rare, the F1 Score provides a better measure of performance than accuracy.

- Trade-off Between Precision and Recall:

- When you need to balance precision and recall, rather than optimizing for one at the expense of the other.

Example of F1 Score

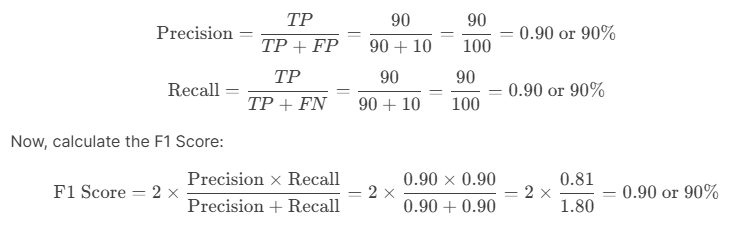

Let’s say you have a binary classification problem where you’re predicting whether a patient has a disease (Positive) or not (Negative). After evaluating your model, you get the following results:

- True Positives (TP): 90 (patients with the disease correctly identified).

- False Positives (FP): 10 (healthy patients incorrectly identified as having the disease).

- False Negatives (FN): 10 (patients with the disease incorrectly identified as healthy).

First, calculate precision and recall:

This means the F1 Score is 90%, indicating a good balance between precision and recall.

Advantages of the F1 Score

- Balances Precision and Recall:

- The F1 Score provides a single metric that balances the trade-off between precision and recall.

- Useful for Imbalanced Datasets:

- It’s particularly useful when the positive class is rare, as it focuses on the performance of the positive class.

- Robust Metric:

- It’s less likely to be skewed by class imbalance compared to accuracy.

Limitations of the F1 Score

While the F1 Score is useful, it has some limitations:

- Ignores True Negatives:

- The F1 Score doesn’t account for true negatives, which might be important in some applications.

- Not Suitable for Multi-Class Problems:

- For multi-class classification, you might need to use metrics like macro-F1 or weighted-F1.

- Assumes Equal Importance of Precision and Recall:

- If precision and recall have different levels of importance for your problem, the F1 Score might not be the best metric.

When Not to Use the F1 Score

Avoid using the F1 Score when:

- True Negatives are Important:

- If true negatives are critical (e.g., in some anomaly detection tasks), consider using metrics like specificity or AUC-ROC.

- Precision or Recall is More Important:

- If one of precision or recall is more important than the other, you might want to optimize for that specific metric instead.

F1 Score in Context: Precision-Recall Trade-off

The F1 Score is particularly useful in scenarios where there’s a trade-off between precision and recall:

- High Precision, Low Recall: The model is very accurate in its positive predictions but misses many actual positive cases.

- High Recall, Low Precision: The model identifies most positive cases but has many false positives.

- Balanced Precision and Recall: The F1 Score ensures that both precision and recall are reasonably high.

Hands-On Example of F1 Score

Let’s calculate the F1 Score using Python and Scikit-learn:

from sklearn.metrics import f1_score

# True labels

y_true = [0, 1, 1, 0, 1, 0, 0, 1, 1, 0] # 0 = Healthy, 1 = Disease

# Predicted labels

y_pred = [0, 1, 0, 0, 1, 0, 1, 1, 1, 0] # Model's predictions

# Calculate F1 Score

f1 = f1_score(y_true, y_pred)

print(f"F1 Score: {f1 * 100:.2f}%")

Output

F1 Score: 80.00%

Key Takeaways

- The F1 Score is the harmonic mean of precision and recall, providing a balance between the two.

- It’s a critical metric when both false positives and false negatives are important (e.g., medical diagnosis, fraud detection).

- It’s particularly useful for imbalanced datasets, where the positive class is rare.

- Use the F1 Score when you need to balance precision and recall, rather than optimizing for one at the expense of the other.

If you liked the tutorial, spread the word and share the link and our website Studyopedia with others.

For Videos, Join Our YouTube Channel: Join Now

Read More:

- NLP Tutorial

- Generative AI Tutorial

- Machine Learning Tutorial

- Deep Learning Tutorial

- Ollama Tutorial

- Retrieval Augmented Generation (RAG) Tutorial

- Copilot Tutorial

- Gemini Tutorial

- ChatGPT Tutorial

No Comments