05 Oct Features of Deep Learning

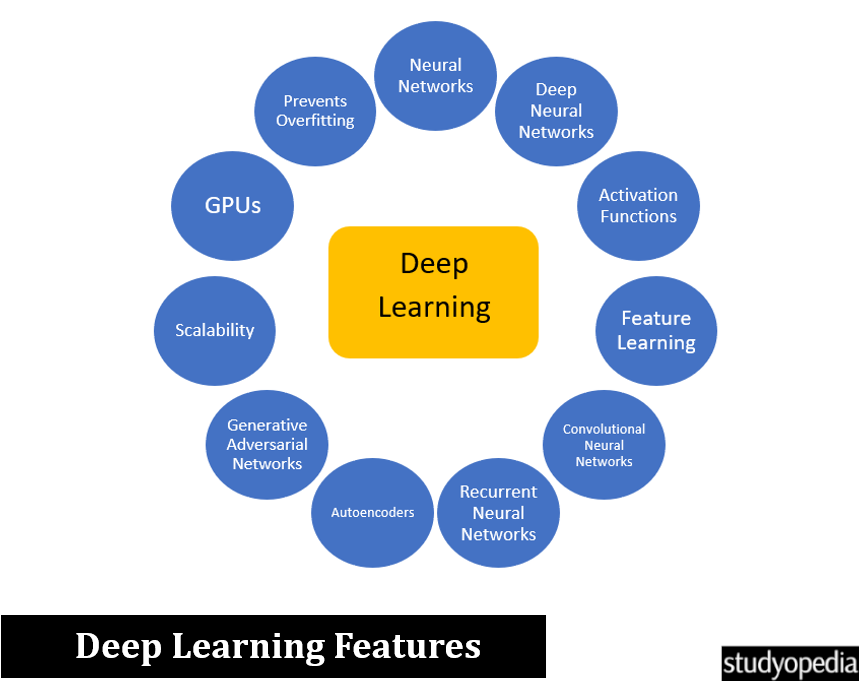

Let us see the features of Deep Learning. Deep Learning is a subset of Machine Learning that is useful for solving complex problems. Generative AI, one of the most trending topics, is also a part of Deep Learning:

- Neural Networks: Neural Networks are the backbone of Deep Learning. A neural network is a series of algorithms that attempts to recognize underlying relationships in a set of data. Neural networks are inspired by biological neurons.

- Deep Neural Networks (DNNs): Deep Neural Networks (DNNs) have multiple hidden layers between the input and output layers. The “deep” in deep neural networks refers to the depth, i.e., the number of layers, which can range from a few to hundreds. A DNN is a type of artificial neural network.

- Activation Functions: Activation functions determine whether, and how strongly, a neuron is activated, and they introduce non-linearity into the model. This enables the network to learn complex patterns. They are a core part of neural networks in deep learning; common examples include ReLU, sigmoid, and tanh.

- Feature Learning: Feature learning involves automatically discovering and extracting the relevant features or representations needed for a task directly from raw data. Feature learning is also known as representation learning.

- Convolutional Neural Networks (CNNs): Convolutional Neural Networks (CNNs) are used for image and video processing. Loosely inspired by how the human brain processes visual information, they use layers of convolutional filters to detect features in images, such as edges, textures, and shapes. CNNs are a type of deep learning model.

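- Recurrent Neural Networks (RNNs): Recurrent Neural Networks (RNNs) are designed for processing sequential data, such as text, speech, or time series. They maintain a hidden state that carries information from earlier steps of a sequence to later ones. RNNs are a class of neural networks.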

- Autoencoders: Autoencoders learn efficient representations of data, mostly for dimensionality reduction or feature learning. They have an encoder, which compresses the input into a compact representation, and a decoder, which reconstructs the input from that representation. Autoencoders are a type of neural network.

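- Generative Adversarial Networks (GANs): Generative Adversarial Networks (GANs) are a class of machine learning frameworks originally designed for unsupervised learning. A GAN consists of two neural networks, a generator and a discriminator, trained against each other: the generator creates synthetic samples, and the discriminator tries to distinguish them from real data.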

- Scalability: Deep learning models can handle large volumes of data and complex architectures.

- GPUs: Deep learning takes advantage of modern GPUs (Graphics Processing Units) for parallel computation. This speeds up the training process.

- Overfitting Prevention: Regularization techniques like dropout, batch normalization, and weight regularization help prevent overfitting, improving model performance on unseen data.
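To make the Deep Neural Networks and Activation Functions items above more concrete, here is a minimal sketch in plain Python (no libraries) of a forward pass through a small network with two hidden layers. The helper names (`relu`, `sigmoid`, `dense`, `forward`), the layer sizes, and the weights are hypothetical and chosen only for illustration; a real network learns its weights during training and would normally be built with a framework such as PyTorch or TensorFlow.

```python
import math

# Two common activation functions; each adds non-linearity to a neuron's output.
def relu(x: float) -> float:
    return max(0.0, x)

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def forward(x, layers):
    """Pass the input through a stack of (weights, biases, activation) layers."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x

# Hypothetical network: 2 inputs -> hidden layers of 3 and 2 neurons -> 1 output.
# The weights are arbitrary illustrative values, not trained parameters.
layers = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, 0.2], relu),
    ([[0.2, -0.5, 0.4], [0.7, 0.1, -0.3]], [0.0, 0.0], relu),
    ([[1.0, -1.0]], [0.0], sigmoid),
]

output = forward([1.0, 2.0], layers)
print(output)
```

Each layer computes a weighted sum of its inputs and passes it through an activation function. Without the activation functions, the stacked layers would collapse into a single linear transformation, which is why non-linearity is needed to learn complex patterns.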
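The Convolutional Neural Networks item describes filters that detect features in images. The following sketch, assuming a tiny grayscale image stored as a list of lists, slides a hand-picked 3x3 vertical-edge filter over the image (stride 1, no padding). The function name `conv2d`, the image, and the filter values are illustrative assumptions; in a CNN the filter values are learned rather than chosen by hand.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding) and sum the products.

    Deep learning libraries typically implement this operation (technically a
    cross-correlation) and still call it convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# A 4x6 grayscale "image": dark (0) columns on the left, bright (1) on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# A hand-picked vertical-edge filter; a real CNN would learn these weights.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)
```

The resulting feature map is `[[0, 3, 3, 0], [0, 3, 3, 0]]`: it is non-zero only where the filter window overlaps the boundary between the dark and bright columns, which is how a single filter responds to an edge.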
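The key idea behind Recurrent Neural Networks is a hidden state that carries information from earlier steps of a sequence to later ones. Here is a simplified sketch with a single recurrent unit. The function `rnn_forward`, the scalar weights `w_x` and `w_h`, and their values are hypothetical; tanh is used because it is a common choice in basic RNNs, and real RNNs use weight matrices and vectors instead of single numbers.

```python
import math

def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a single-unit RNN over a sequence and return the hidden state at each step.

    The same weights (w_x, w_h, b) are reused at every time step, and the
    hidden state h carries information from earlier inputs forward.
    """
    h = h0
    states = []
    for x in sequence:
        # New state depends on the current input and the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

states = rnn_forward([1.0, 0.0, 0.0], w_x=0.8, w_h=0.5, b=0.0)
print(states)
```

Even though the second and third inputs are zero, the hidden states stay positive (while gradually fading) because they carry information forward from the first input, which is what lets an RNN process sequential data.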
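Dropout, one of the regularization techniques mentioned above for preventing overfitting, can be sketched in a few lines. The version below is inverted dropout, a common variant (PyTorch's `nn.Dropout`, for example, scales kept values by 1 / (1 - p) during training). The function name `dropout` and its parameters are hypothetical and only meant to illustrate the idea on a plain list of activations.

```python
import random

def dropout(activations, p, training=True, rng=None):
    """Inverted dropout on a list of activations.

    During training, each activation is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p), so the expected value is unchanged.
    At inference time (training=False), activations pass through untouched.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # fixed seed so the example is reproducible
acts = [1.0, 2.0, 3.0, 4.0]
print(dropout(acts, p=0.5, rng=rng))          # some values zeroed, the rest doubled
print(dropout(acts, p=0.5, training=False))   # unchanged at inference time
```

Scaling the kept activations during training means the network needs no adjustment at inference time, when dropout is switched off.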

If you liked the tutorial, spread the word and share the link and our website Studyopedia with others.
