Recurrent Neural Networks (RNNs)

07 Oct

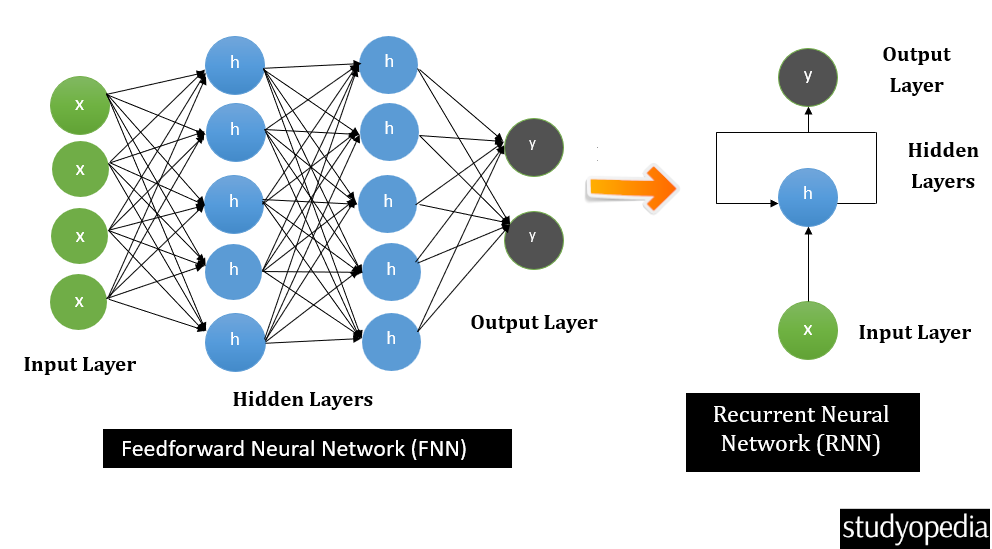

Recurrent Neural Networks (RNNs) are a type of neural network designed to process sequential data, which Feedforward Neural Networks (FNNs) cannot do well. RNNs can also retain information about previous inputs, because they have connections that form directed cycles. This makes them well suited to tasks where time and order matter, such as speech recognition, weather forecasting, and video captioning. RNNs were introduced to address several limitations of FNNs:

- FNN cannot handle sequential data

- FNN cannot memorize previous inputs

- FNN considers only the current input

An RNN includes a hidden layer whose state carries information from previous inputs. For example, to predict the next word of a sentence, you need the words that came before it. The RNN's hidden layer solves this by remembering that earlier context.

Architecture of Recurrent Neural Networks (RNN)

Based on what we learned above, let us now look at the architecture of an RNN. We also show an FNN and how it can be converted into an RNN.

In an RNN, the hidden state from the previous time step is fed back in together with the input at the current time step.

Above, x is the input layer, h is the hidden layer, and y is the output layer.
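To make this feedback loop concrete, here is a minimal sketch in plain Python of a vanilla (Elman-style) RNN forward pass. It assumes the common textbook formulation h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h) and y_t = W_hy h_t + b_y. The weight names, the tanh activation, the zero initial state and the layer sizes are illustrative assumptions, not details taken from the figure.

```python
import math
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def vec_add(*vecs):
    """Element-wise sum of equal-length vectors."""
    return [sum(vals) for vals in zip(*vecs)]


def rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y):
    """Run a vanilla RNN over the input sequence xs, one time step at a time.

    Returns the hidden state and the output produced at every time step.
    """
    h = [0.0] * len(b_h)  # assumed initial hidden state h_0: all zeros
    hs, ys = [], []
    for x in xs:  # steps run in order: each one needs the previous h
        # h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)
        h = [math.tanh(v) for v in vec_add(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        # y_t = W_hy h_t + b_y
        y = vec_add(matvec(W_hy, h), b_y)
        hs.append(h)
        ys.append(y)
    return hs, ys


def rand_matrix(rows, cols, rng):
    """Small random weights, for illustration only."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# Hypothetical sizes: 3-dimensional inputs, 4 hidden units, 2 outputs.
rng = random.Random(0)
W_xh, W_hh, W_hy = rand_matrix(4, 3, rng), rand_matrix(4, 4, rng), rand_matrix(2, 4, rng)
b_h, b_y = [0.0] * 4, [0.0] * 2
xs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # a sequence of 3 inputs

hs, ys = rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y)
print(len(hs), len(ys))  # 3 3
```

The same matrices W_xh, W_hh and W_hy are reused at every step. Only the hidden state h changes, and it is what carries information from earlier inputs forward.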

Applications of Recurrent Neural Networks

- Natural Language Processing: Text generation, translation, and sentiment analysis.

- Language Translation: Translating text or speech from one language into another, for example from English into Spanish, Chinese, or German.

- Speech Recognition: Converting spoken words into written text.

- Video Analysis: Activity recognition and video captioning.

- Time Series Prediction: Stock market prediction, weather forecasting.

- Music Composition: Generating melodies based on learned patterns.

Advantages of Recurrent Neural Networks

The following are the advantages of RNN:

- RNNs are designed to handle sequential data, making them ideal for tasks where time and order matter.

- RNNs preserve contextual information across time steps through the hidden state.

- RNNs use the same parameters at every time step, performing the same computation on each input and hidden state to produce the output. This parameter sharing keeps the number of parameters independent of the sequence length.
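The parameter-sharing advantage can be checked with a little arithmetic. The sketch below assumes a typical vanilla RNN layout with weight matrices W_xh, W_hh, W_hy and biases b_h, b_y. These names are hypothetical. It compares that layout with a hypothetical network that keeps a separate copy of every weight for each time step.

```python
def rnn_param_count(n_x, n_h, n_y):
    """Parameters in a vanilla RNN: W_xh, W_hh, b_h, W_hy and b_y.

    These are shared by every time step, so the sequence length does not appear.
    """
    return n_h * n_x + n_h * n_h + n_h + n_y * n_h + n_y


def unshared_param_count(n_x, n_h, n_y, seq_len):
    """Hypothetical comparison: a separate copy of all weights for each time step."""
    return seq_len * rnn_param_count(n_x, n_h, n_y)


# Hypothetical sizes: 100 input features, 128 hidden units, 10 outputs.
for seq_len in (10, 100, 1000):
    print(seq_len, rnn_param_count(100, 128, 10), unshared_param_count(100, 128, 10, seq_len))
# 10 30602 306020
# 100 30602 3060200
# 1000 30602 30602000
```

With shared weights, the count stays at 12,800 + 16,384 + 128 + 1,280 + 10 = 30,602 whatever the sequence length. The unshared version grows linearly with it.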

Disadvantages of Recurrent Neural Networks

The following are the disadvantages of RNN:

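- Training RNNs can be computationally expensive.

- RNNs are hard to parallelize across time steps, because each step depends on the hidden state from the previous step. This leads to longer training times compared to models like CNNs.

- RNNs struggle to learn dependencies across very long sequences, largely because gradients tend to vanish (or explode) as they are propagated back through many time steps.

- RNNs can easily overfit, especially on small datasets or when regularization techniques are not applied properly.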
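The difficulty with long sequences can be seen in a tiny example of the vanishing-gradient problem. The sketch below uses a hypothetical one-unit RNN, h_t = tanh(w * h_{t-1} + u * x_t), and applies the chain rule to compute how strongly the final hidden state responds to the initial one. The weights and inputs are illustrative assumptions.

```python
import math


def gradient_through_time(w, u, xs):
    """Scalar RNN h_t = tanh(w * h_{t-1} + u * x_t), starting from h_0 = 0.

    Returns d h_T / d h_0, computed with the chain rule as the product of
    the per-step derivatives d h_t / d h_{t-1} = w * (1 - h_t**2).
    """
    h, grad = 0.0, 1.0
    for x in xs:
        h = math.tanh(w * h + u * x)
        grad *= w * (1.0 - h * h)  # multiply in this step's local derivative
    return grad


# Illustrative weights: each factor has magnitude at most |w| = 0.5,
# so the gradient shrinks at least as fast as 0.5 ** T.
for T in (5, 20, 50):
    g = gradient_through_time(w=0.5, u=1.0, xs=[1.0] * T)
    print(T, g)
```

After 50 steps, the signal reaching the first time step is negligible. This makes it hard for training to learn dependencies that span that far.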

Types of Recurrent Neural Networks

The following are the types of RNN:

- One-to-one Recurrent Neural Networks

- One-to-many Recurrent Neural Networks

- Many-to-one Recurrent Neural Networks

- Many-to-many Recurrent Neural Networks

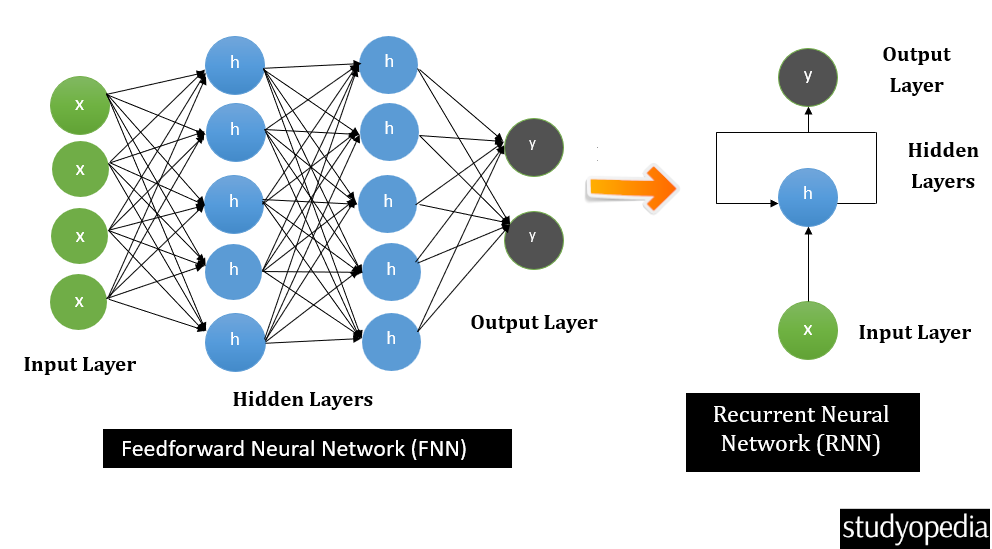

One-to-one RNN

It is also known as a Vanilla Neural Network. It has exactly one input and one output. For example, a stock price is fed in and the output is the predicted stock price for a future period.

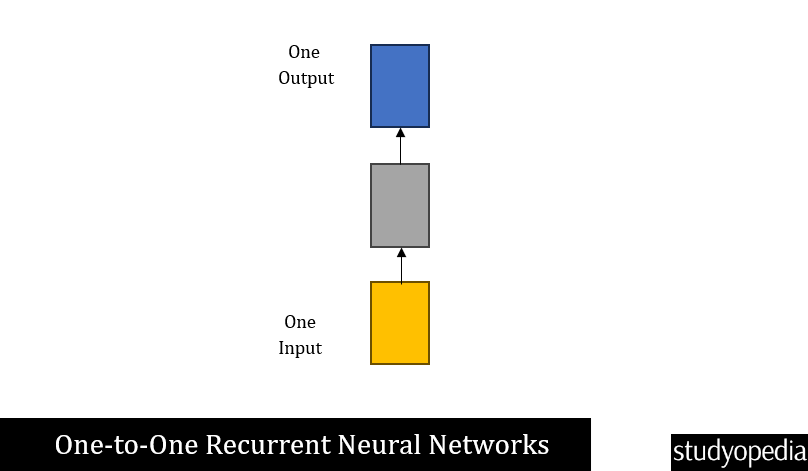

One-to-many RNN

This type has one input and many outputs. For example, in image captioning, the image is the input and the caption, a sequence of words, is the output.

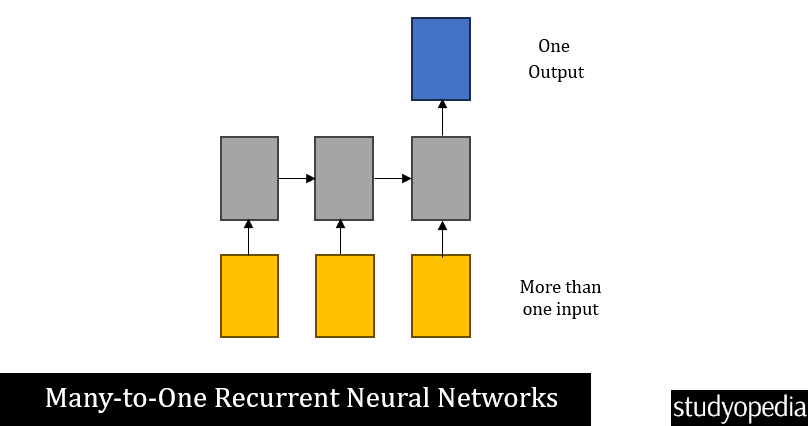

Many-to-one RNN

This type takes a sequence of inputs and produces a single output. For example, in sentiment analysis, a sequence of words is the input and the output is a single label such as positive or negative.

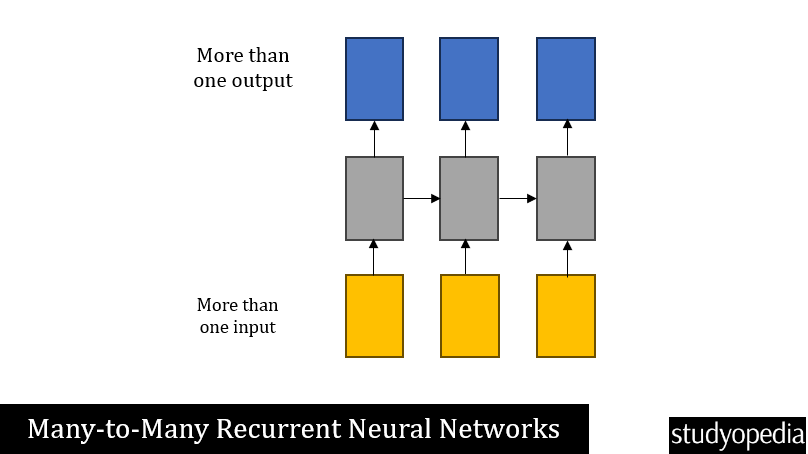

Many-to-many RNN

This type takes multiple inputs and generates multiple outputs. For example, in language translation, a sentence in English is the input and its Spanish translation is the output.
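The four types differ mainly in how inputs are fed in and which outputs are kept. The sketch below shows each pattern using one hypothetical scalar RNN cell. The weights W_XH, W_HH and W_HY are arbitrary illustrative values.

The sketch makes two assumptions:

- The many-to-many case uses the simplest synchronized form, with one output per input. Translation systems usually use an encoder-decoder arrangement instead, so that the input and output sequences can differ in length.
- In the one-to-many case, each output is fed back in as the next input.

```python
import math

# Hypothetical scalar weights, chosen only for illustration.
W_XH, W_HH, W_HY = 0.8, 0.5, 1.2


def step(x, h):
    """One RNN time step: returns (new hidden state, output)."""
    h = math.tanh(W_XH * x + W_HH * h)
    return h, W_HY * h


def one_to_one(x):
    """One input, one output: a single step from a zero hidden state."""
    _, y = step(x, 0.0)
    return y


def one_to_many(x, n_outputs):
    """One input, several outputs: each output is fed back as the next input."""
    h, outputs = 0.0, []
    for _ in range(n_outputs):
        h, y = step(x, h)
        outputs.append(y)
        x = y  # assumed feedback: the previous output becomes the next input
    return outputs


def many_to_one(xs):
    """Several inputs, one output: only the output of the last step is kept."""
    h, y = 0.0, 0.0
    for x in xs:
        h, y = step(x, h)
    return y


def many_to_many(xs):
    """Several inputs, one output per input time step."""
    h, outputs = 0.0, []
    for x in xs:
        h, y = step(x, h)
        outputs.append(y)
    return outputs


seq = [0.1, -0.4, 0.9]
print(len(many_to_many(seq)))    # 3
print(len(one_to_many(0.5, 4)))  # 4
```

The cell itself never changes. Only the loop around it decides how many inputs are consumed and how many outputs are produced.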

If you liked the tutorial, spread the word and share the link and our website Studyopedia with others.
