07 Oct Autoencoders – Deep Learning

An autoencoder is a type of neural network that receives an input, compresses it into a lower-dimensional representation, and then reconstructs the input from that representation. Autoencoders are mostly used for unsupervised learning because they do not require labeled data during training: the input itself serves as the target. Common applications include image compression, anomaly detection, and denoising of images and audio signals.

Components of Autoencoders

The following are the components of Autoencoders:

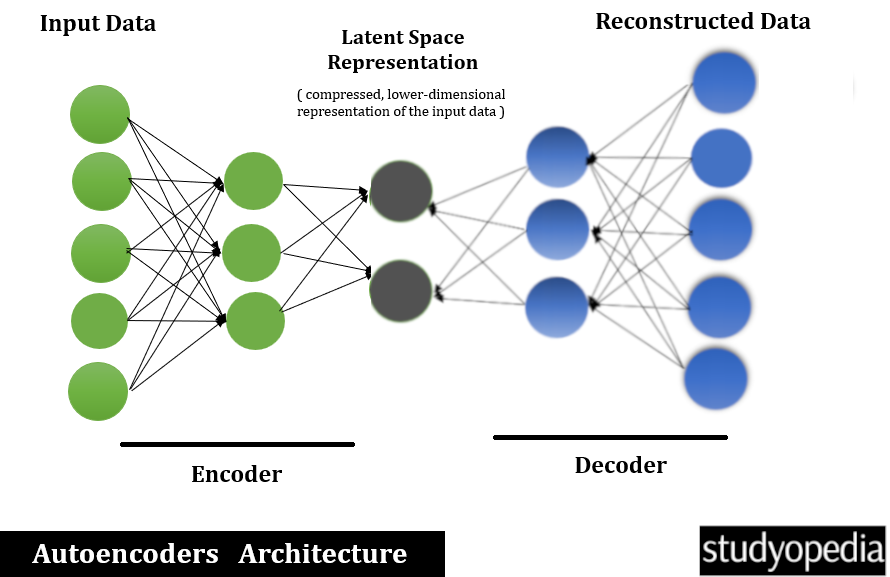

- Encoder: The encoder compresses the input data into a lower-dimensional representation, known as the latent space or bottleneck. This part of the network learns to capture the most essential features of the input data.

- Latent Space: The compressed, lower-dimensional representation of the input data. This space holds the key features needed to reconstruct the original data. Latent space representations can filter out noise, retaining only meaningful patterns.

- Decoder: The decoder reconstructs the original input data from the compressed representation. This part of the network learns to generate data from the latent space representation.
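
The three components above can be sketched in a few lines of NumPy. This is only a minimal illustration, not a trained model: the layer sizes, the `tanh` activation, and the names `encode`, `decode`, `W_enc`, and `W_dec` are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

input_dim, latent_dim = 8, 3  # hypothetical sizes chosen for illustration

# Randomly initialised weights; a real model would learn these by training.
W_enc = rng.normal(scale=0.1, size=(input_dim, latent_dim))
b_enc = np.zeros(latent_dim)
W_dec = rng.normal(scale=0.1, size=(latent_dim, input_dim))
b_dec = np.zeros(input_dim)

def encode(x):
    """Encoder: map inputs to the lower-dimensional latent space."""
    return np.tanh(x @ W_enc + b_enc)

def decode(z):
    """Decoder: map latent codes back to the input space."""
    return z @ W_dec + b_dec

x = rng.normal(size=(5, input_dim))  # batch of 5 samples
z = encode(x)                        # latent (bottleneck) representation
x_hat = decode(z)                    # reconstruction, same shape as the input

print(x.shape, z.shape, x_hat.shape)
```

The latent code `z` has fewer columns than `x`, which is what makes it a bottleneck, while `x_hat` has the same shape as the input.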

Process of Autoencoders

The following is the process of autoencoders:

- Input Layer: Receives the raw data (e.g., images, text).

- Encoder Layers: Compress the input data into a lower-dimensional representation. Such representations are easier to visualize, which helps in understanding the structure of the data.

- Latent Space: The bottleneck layer where the data is represented in compressed form.

- Decoder Layers: Reconstruct the original data from the compressed representation.

- Output Layer: Produces the reconstructed data, ideally as close to the original as possible.
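
The process above becomes a training loop once a reconstruction loss is added. The sketch below assumes a purely linear autoencoder, mean squared error as the loss, and plain gradient descent. The toy data, learning rate, and step count are illustrative choices, not values from this tutorial.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: 200 samples in 6 dimensions that actually lie on a 2-D subspace.
basis = rng.normal(size=(2, 6))
X = rng.normal(size=(200, 2)) @ basis

n, d = X.shape
k = 2                                        # size of the latent space
W_enc = rng.normal(scale=0.1, size=(d, k))   # encoder weights
W_dec = rng.normal(scale=0.1, size=(k, d))   # decoder weights
lr = 0.05                                    # assumed learning rate

def reconstruction_loss(X, W_enc, W_dec):
    """Mean squared error between the input and its reconstruction."""
    return np.mean((X @ W_enc @ W_dec - X) ** 2)

initial_loss = reconstruction_loss(X, W_enc, W_dec)

for step in range(2000):
    Z = X @ W_enc                    # encoder: input -> latent space
    X_hat = Z @ W_dec                # decoder: latent space -> output
    grad_out = 2 * (X_hat - X) / X.size      # dLoss/dX_hat for the MSE
    grad_W_dec = Z.T @ grad_out
    grad_W_enc = X.T @ (grad_out @ W_dec.T)
    W_dec -= lr * grad_W_dec
    W_enc -= lr * grad_W_enc

final_loss = reconstruction_loss(X, W_enc, W_dec)
print("loss before training:", initial_loss)
print("loss after training: ", final_loss)
```

Because the target is the input itself, no labels are needed. Real autoencoders usually add non-linear activations and use a deep learning framework instead of hand-written gradients.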

Architecture of Autoencoders

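The architecture of an autoencoder chains encoding and decoding: the input passes through the encoder layers to the latent space, and the decoder layers map that compressed representation back to an output of the same shape as the input.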

Applications of Autoencoders

The following are the applications of Autoencoders:

- Generate new images: Variational Autoencoders (VAEs) can generate new images by sampling from the learned latent space. Example: Creating new faces or artistic styles.

- Dimensionality Reduction: Autoencoders can reduce the number of features in a dataset, making it easier to visualize or process. Example: Reducing the dimensionality of image data for visualization or preprocessing before feeding into another model.

- Data Denoising: Autoencoders can be trained to remove noise from data by learning to reconstruct clean data from noisy inputs. Example: Denoising images or audio signals.

- Anomaly Detection: Autoencoders can detect anomalies by learning to reconstruct normal data patterns. Inputs that deviate from these patterns have higher reconstruction errors and can be flagged as anomalies. Example: Identifying fraudulent transactions in financial data.
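
Anomaly detection by reconstruction error can be sketched as follows. To keep the example short, the top principal directions from an SVD stand in for a trained linear encoder and decoder, which is a swapped-in simplification rather than a neural network. The synthetic data and the 99th-percentile threshold are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

# "Normal" data lies close to a 2-D plane inside a 5-D space.
basis = rng.normal(size=(2, 5))
normal = rng.normal(size=(300, 2)) @ basis + 0.05 * rng.normal(size=(300, 5))

mean = normal.mean(axis=0)
# Top-2 principal directions stand in for a trained linear encoder/decoder.
_, _, Vt = np.linalg.svd(normal - mean, full_matrices=False)
components = Vt[:2]                      # shape (2, 5)

def reconstruction_error(x):
    """Per-sample mean squared error after encoding and decoding."""
    z = (x - mean) @ components.T        # encode to 2 dimensions
    x_hat = z @ components + mean        # decode back to 5 dimensions
    return np.mean((x - x_hat) ** 2, axis=1)

# Assumed rule of thumb: flag anything above the 99th percentile of normal errors.
threshold = np.percentile(reconstruction_error(normal), 99)

typical = rng.normal(size=(1, 2)) @ basis    # follows the normal pattern
outlier = rng.normal(size=(1, 5)) * 5.0      # does not follow the pattern

errors = reconstruction_error(np.vstack([typical, outlier]))
is_anomaly = errors > threshold
print(errors, is_anomaly)
```

The sample that follows the learned pattern reconstructs well, while the one that deviates from it produces a much larger error and crosses the threshold.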

Example: Image Denoising

Let us see an example of denoising an image with autoencoders:

- Input: A noisy image.

- Encoder: Compresses the noisy image into a lower-dimensional representation.

- Latent Space: Contains the compressed representation.

- Decoder: Reconstructs a clean image from the latent space.

- Output: Denoised image.
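
The denoising steps above differ from ordinary autoencoder training in one detail: the network sees the noisy version as input but is scored against the clean version. The sketch below assumes synthetic low-dimensional vectors in place of images, a linear encoder and decoder, and hand-picked hyperparameters.

```python
import numpy as np

rng = np.random.default_rng(2)

# Clean signals lie on a 2-D subspace of a 10-D space; noise is added on top.
basis = rng.normal(size=(2, 10))
clean = rng.normal(size=(500, 2)) @ basis
noisy = clean + 0.5 * rng.normal(size=clean.shape)

d, k = 10, 2
W_enc = rng.normal(scale=0.1, size=(d, k))
W_dec = rng.normal(scale=0.1, size=(k, d))
lr = 0.02                                    # assumed learning rate

for _ in range(3000):
    Z = noisy @ W_enc                        # encoder sees the noisy input
    out = Z @ W_dec                          # decoder produces the reconstruction
    grad_out = 2 * (out - clean) / clean.size    # loss compares against the clean target
    grad_W_dec = Z.T @ grad_out
    grad_W_enc = noisy.T @ (grad_out @ W_dec.T)
    W_dec -= lr * grad_W_dec
    W_enc -= lr * grad_W_enc

denoised = noisy @ W_enc @ W_dec
error_before = np.mean((noisy - clean) ** 2)     # noise level in the input
error_after = np.mean((denoised - clean) ** 2)   # remaining error after denoising
print("error before:", error_before)
print("error after: ", error_after)
```

The bottleneck can only keep the two directions that carry the signal, so most of the noise in the remaining directions is discarded.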

Types of Autoencoders

The following are the types of Autoencoders:

- Standard Autoencoders: This is the basic form with an encoder and decoder.

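- Sparse Autoencoders: Encourage sparsity in the hidden units to learn more meaningful features. Sparsity is typically enforced by adding a penalty on the hidden activations (for example, an L1 or KL-divergence term) to the loss, so that only a few units are active for any given input, rather than by reducing the number of nodes in each hidden layer.

- Denoising Autoencoders: Trained to reconstruct clean data from a noisy version of the input.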

- Variational Autoencoders (VAEs): Generate new data by sampling from a learned distribution.
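
What distinguishes these types is mostly the loss function and how the latent code is produced. The helper functions below are a rough sketch under common textbook formulations: an L1 penalty is one of several ways to encourage sparsity, and the VAE part assumes a diagonal Gaussian latent distribution. The function names and the `l1_weight` default are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)

def standard_loss(x, x_hat):
    """Standard autoencoder: plain reconstruction error (MSE)."""
    return np.mean((x - x_hat) ** 2)

def sparse_loss(x, x_hat, hidden, l1_weight=1e-3):
    """Sparse autoencoder: reconstruction error plus an L1 penalty on hidden activations."""
    return standard_loss(x, x_hat) + l1_weight * np.mean(np.abs(hidden))

def corrupt(x, noise_std=0.1):
    """Denoising autoencoder: the input is corrupted, the target stays clean."""
    return x + noise_std * rng.standard_normal(x.shape)

def reparameterize(mu, log_var):
    """VAE sampling step: z = mu + sigma * eps, with eps drawn from N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu, log_var):
    """VAE regulariser: KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions."""
    return -0.5 * np.sum(1 + log_var - mu ** 2 - np.exp(log_var), axis=-1)

# Small usage example with made-up values.
mu = np.zeros((4, 2))
log_var = np.zeros((4, 2))
z = reparameterize(mu, log_var)              # one latent sample per row
kl = kl_to_standard_normal(mu, log_var)      # zero when the code already matches N(0, I)
print(z.shape, kl)
```

Generating new data with a VAE then amounts to drawing `z` from the standard normal distribution and passing it through the trained decoder.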
